Tag: ARTICLE

Model Evasion in the context of machine learning for cybersecurity refers to the tactical manipulation of input data, algorithmic processes, or outputs to mislead or subvert the intended operations of a machine learning model. In mathematical terms, evasion can be framed as an optimization problem: the attacker minimizes or maximizes a loss function while keeping the essential characteristics of the input data intact. Concretely, this could mean finding a modified input x' close to the original x such that f(x') does not equal the true label y, where f is the classifier and x is the input vector.
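
To make the optimization view concrete, here is a minimal sketch for the simplest possible case, a linear classifier, where the smallest change (in Euclidean distance) that flips the prediction can be written in closed form. The weights, bias, and input are hypothetical values chosen for illustration; real evasion attacks against non-linear models typically need iterative search rather than a single formula.

```python
import math

def predict(w, b, x):
    """Linear classifier f(x): returns 1 if w.x + b > 0, else 0."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score > 0 else 0

def minimal_evasion(w, b, x, overshoot=1e-3):
    """Smallest L2 change that moves x just across the decision boundary."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    norm_sq = sum(wi * wi for wi in w)
    # Step along the weight vector by the distance to the boundary,
    # plus a tiny overshoot so the point lands on the other side.
    scale = -(score / norm_sq) * (1 + overshoot)
    return [xi + scale * wi for xi, wi in zip(x, w)]

w, b = [2.0, -1.0], 0.5   # hypothetical model parameters
x = [1.0, 0.5]            # hypothetical input whose true label is y = 1

x_adv = minimal_evasion(w, b, x)
delta_norm = math.sqrt(sum((a - c) ** 2 for a, c in zip(x_adv, x)))

print("original prediction:", predict(w, b, x))
print("evasive prediction: ", predict(w, b, x_adv))
print("size of the change: ", round(delta_norm, 3))
```

The size of the change is the quantity the attacker tries to keep small, which is one way to read "without altering the essential characteristics of the input data".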

While cryptographically relevant quantum computers (CRQCs) capable of breaking current public key encryption algorithms have not yet materialized, technological advancements are pushing us towards what is ominously dubbed 'Q-Day', the day a CRQC becomes operational. Many experts believe that Q-Day, or Y2Q as it's sometimes called, is just around the corner, suggesting it could occur by 2030 or even sooner; some speculate that a CRQC may already exist within secret government laboratories.

Homomorphic Encryption has transitioned from being a mathematical curiosity to a linchpin in fortifying machine learning workflows against data vulnerabilities. Its complex nature notwithstanding, the unparalleled privacy and security benefits it offers are compelling enough to warrant its growing ubiquity. As machine learning integrates increasingly with sensitive sectors like healthcare, finance, and national security, the imperative for employing encryption techniques that are both potent and efficient becomes inescapable.

Data poisoning is a targeted form of attack wherein an adversary deliberately manipulates the training data to compromise the efficacy of machine learning models. The training phase of a machine learning model is particularly vulnerable to this type of attack because most algorithms are designed to fit their parameters as closely as possible to the training data. An attacker with sufficient knowledge of the dataset and model architecture can introduce 'poisoned' data points into the training set, affecting the model's parameter tuning. This leads to alterations in the model's future performance that align with the attacker’s objectives, which could range from making incorrect predictions and misclassifications to more sophisticated outcomes like data leakage or revealing sensitive information.
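
As a rough illustration of how injected training points shift a model's parameters, the sketch below poisons a deliberately tiny one-dimensional nearest-centroid classifier. The dataset, the poisoned points, and the target value are all invented for the example; it is not meant to represent any particular real-world attack.

```python
def centroid_classifier(train):
    """Fit a 1-D nearest-centroid classifier from (value, label) pairs."""
    centroids = {}
    for label in {lbl for _, lbl in train}:
        values = [v for v, lbl in train if lbl == label]
        centroids[label] = sum(values) / len(values)
    # Predict the label whose centroid is closest to the input value.
    return lambda v: min(centroids, key=lambda lbl: abs(v - centroids[lbl]))

# Hypothetical clean training set: class 0 clusters near 1.0, class 1 near 3.0.
clean = [(0.8, 0), (1.0, 0), (1.2, 0), (2.8, 1), (3.0, 1), (3.2, 1)]

# Hypothetical poison: points that look like class 1 but carry label 0,
# dragging the class-0 centroid towards the attacker's target region.
poison = [(4.0, 0), (4.2, 0), (4.4, 0)]

clean_model = centroid_classifier(clean)
poisoned_model = centroid_classifier(clean + poison)

target = 2.4
print("clean model:   ", clean_model(target))     # 1
print("poisoned model:", poisoned_model(target))  # 0
```

Three injected points are enough here because the model fits its parameters (the centroids) directly to whatever the training set contains, which is the vulnerability the paragraph describes.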

Semantic adversarial attacks represent a specialized form of adversarial manipulation where the attacker focuses not on random or arbitrary alterations to the data but specifically on twisting the semantic meaning or context behind it. Unlike traditional adversarial attacks that often aim to add noise or make pixel-level changes to deceive machine learning models, semantic attacks target the inherent understanding of the data. For example, instead of just altering the color of an image to mislead a visual recognition system, a semantic attack might change the image's meaningful content or context so that the model believes it's seeing something entirely different.

The AI alignment problem sits at the core of all future predictions of AI’s safety. It describes the complex challenge of ensuring AI systems act in ways that are beneficial and not harmful to humans, aligning AI goals and decision-making processes with those of humans, no matter how sophisticated or powerful the AI system becomes. Our trust in the future of AI rests on whether we believe it is possible to guarantee alignment.

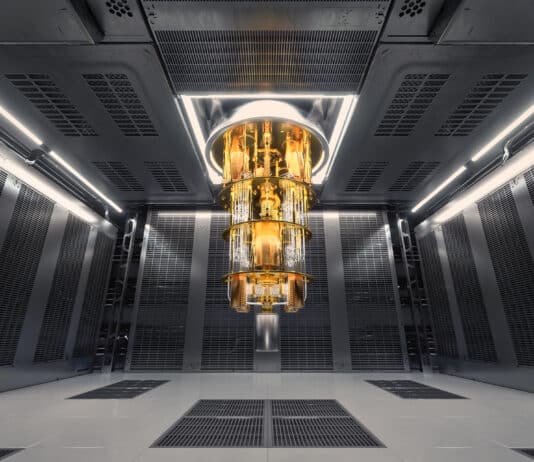

Fidelity in quantum computing measures the accuracy of quantum operations, that is, how closely the result a quantum computer actually produces matches the intended one. In quantum systems, noise and decoherence degrade quantum states, leading to errors and reduced computational accuracy. Errors are not just common; they're expected. Quantum states are delicate, easily disturbed by external factors like temperature fluctuations, electromagnetic fields, and even stray cosmic rays.
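
One common way to put a number on this is the fidelity between two pure states, F = |⟨ψ|φ⟩|², which is 1 when the states match and 0 when they are orthogonal. The sketch below computes it for a single qubit; the 0.1 rad over-rotation is a hypothetical stand-in for hardware error, and fidelity measures for noisy (mixed) states or whole gates are more involved than this.

```python
import math

def state_fidelity(psi, phi):
    """Fidelity |<psi|phi>|^2 between two normalized pure states."""
    overlap = sum(a.conjugate() * b for a, b in zip(psi, phi))
    return abs(overlap) ** 2

# Ideal single-qubit state |+> = (|0> + |1>) / sqrt(2).
ideal = [complex(1 / math.sqrt(2)), complex(1 / math.sqrt(2))]

# Hypothetical imperfect result: the same state over-rotated by 0.1 rad.
theta = math.pi / 4 + 0.1
noisy = [complex(math.cos(theta)), complex(math.sin(theta))]

fidelity = state_fidelity(ideal, noisy)
print("fidelity:", round(fidelity, 4))
```

For this particular error the fidelity works out to cos²(0.1), roughly 0.99, so even a small rotation error costs about one percent of overlap with the intended state.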

"Harvest Now, Decrypt Later" (HNDL), also known as "Store Now, Decrypt Later" (SNDL), is a concerning risk where adversaries collect encrypted data with the intent to decrypt it once quantum computing becomes capable of breaking current encryption methods. This is quantum computing's ticking time bomb, with potential implications for every encrypted byte of data currently considered secure.

The transition to post-quantum cryptography is a complex, multi-faceted process that requires careful planning, significant investment, and a proactive, adaptable approach. By addressing these challenges head-on and preparing for the dynamic cryptographic landscape of the future, organizations can achieve crypto-agility and secure their digital assets against the emerging quantum threat.

As early as the mid-19th century, Charles Babbage designed the Analytical Engine, a mechanical general-purpose computer, and Ada Lovelace wrote detailed notes on its potential. Lovelace is often credited with the idea that such a machine could manipulate symbols in accordance with rules and might act upon things other than just numbers, touching upon concepts central to AI.

While ML offers extensive benefits, it also presents significant challenges, and one of the most prominent is bias in ML models. Bias in ML refers to systematic errors or influences in a model's predictions that lead to unequal treatment of different groups. These biases are problematic as they can reinforce existing inequalities and unfair practices, translating to real-world consequences like discriminatory hiring or unequal law enforcement, thus creating environments of injustice and inequality.
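
One simple way to check for unequal treatment is to compare how often a model gives a favorable outcome to each group, a measure often called the demographic parity gap. The sketch below uses made-up hiring predictions and group labels; it illustrates a single fairness metric and is not a complete bias audit.

```python
def selection_rates(predictions, groups):
    """Fraction of positive predictions (1s) for each group."""
    rates = {}
    for g in set(groups):
        preds = [p for p, grp in zip(predictions, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return rates

# Hypothetical hiring-model outputs: 1 = "advance to interview", 0 = "reject".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
parity_gap = abs(rates["A"] - rates["B"])

print("selection rate A:", rates["A"])   # 0.75
print("selection rate B:", rates["B"])   # 0.25
print("parity gap:      ", parity_gap)   # 0.5
```

A gap of zero would mean both groups are advanced at the same rate; a large gap is a signal to investigate, not proof of discrimination on its own.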

In my work with various clients, I frequently encounter a significant misunderstanding about the scope of preparations required to become quantum ready. Many assume that the transition to a post-quantum world will be straightforward, involving only minor patches to a few systems or simple upgrades to hardware security modules (HSMs). Unfortunately, this is a dangerous misconception. Preparing for this seismic shift is far more complex than most realize.

Adversarial attacks specifically target the vulnerabilities in AI and ML systems. At a high level, these attacks involve inputting carefully crafted data into an AI system to trick it into making an incorrect decision or classification. For instance, an adversarial attack could manipulate the pixels in a digital image so subtly that a human eye wouldn't notice the change, but a machine learning model would classify it incorrectly, say, identifying a stop sign as a 45-mph speed limit sign, with potentially disastrous consequences in an autonomous driving context.

Gradient-based attacks refer to a suite of methods employed by adversaries to exploit the vulnerabilities inherent in ML models, focusing particularly on the optimization processes these models utilize to learn and make predictions. These attacks are called “gradient-based” because they primarily exploit gradients, mathematical entities representing the rate of change of the model’s loss or output with respect to its parameters or inputs. During training, gradients act as a guide, showing the direction in which the model’s parameters need to be adjusted to minimize the error in its predictions. An attacker can turn the same signal around: by computing the gradient with respect to the input, the attacker learns which small input changes increase the error the most, and can use them to make the model misbehave, make incorrect predictions, or, in extreme cases, reveal sensitive information about the training data.
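
A well-known example of this family is the Fast Gradient Sign Method (FGSM), which nudges every input feature by a small amount epsilon in the direction that increases the loss. The sketch below applies one FGSM step to a tiny logistic-regression model, where the gradient of the cross-entropy loss with respect to the input has the closed form (p - y) * w. The weights, input, and epsilon are hypothetical values picked for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Logistic-regression probability that x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, epsilon):
    """One FGSM step: shift each feature by epsilon in the loss-increasing direction."""
    # For logistic regression with cross-entropy loss, dL/dx = (p - y) * w.
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]

w, b = [1.5, -2.0, 0.5], 0.1   # hypothetical trained parameters
x, y = [0.4, 0.1, 0.3], 1      # hypothetical input, correctly classified as class 1

x_adv = fgsm(w, b, x, y, epsilon=0.2)

print("P(class 1) before:", round(predict_proba(w, b, x), 3))
print("P(class 1) after: ", round(predict_proba(w, b, x_adv), 3))
```

With these numbers the probability of the correct class drops from above 0.5 to below it, so the predicted label flips even though no feature moved by more than 0.2.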

For the Big 4, this moment represents not just a challenge but an existential threat. Their traditional models, deeply rooted in hierarchical structures and slow-moving, risk-averse processes, leave them especially vulnerable to the pace of AI-driven change. If these firms cannot embrace AI and adapt quickly enough to meet the demands of a flipped industry (redefining their value proposition, overhauling their delivery models, and rethinking their leadership structures), then perhaps their time has passed.